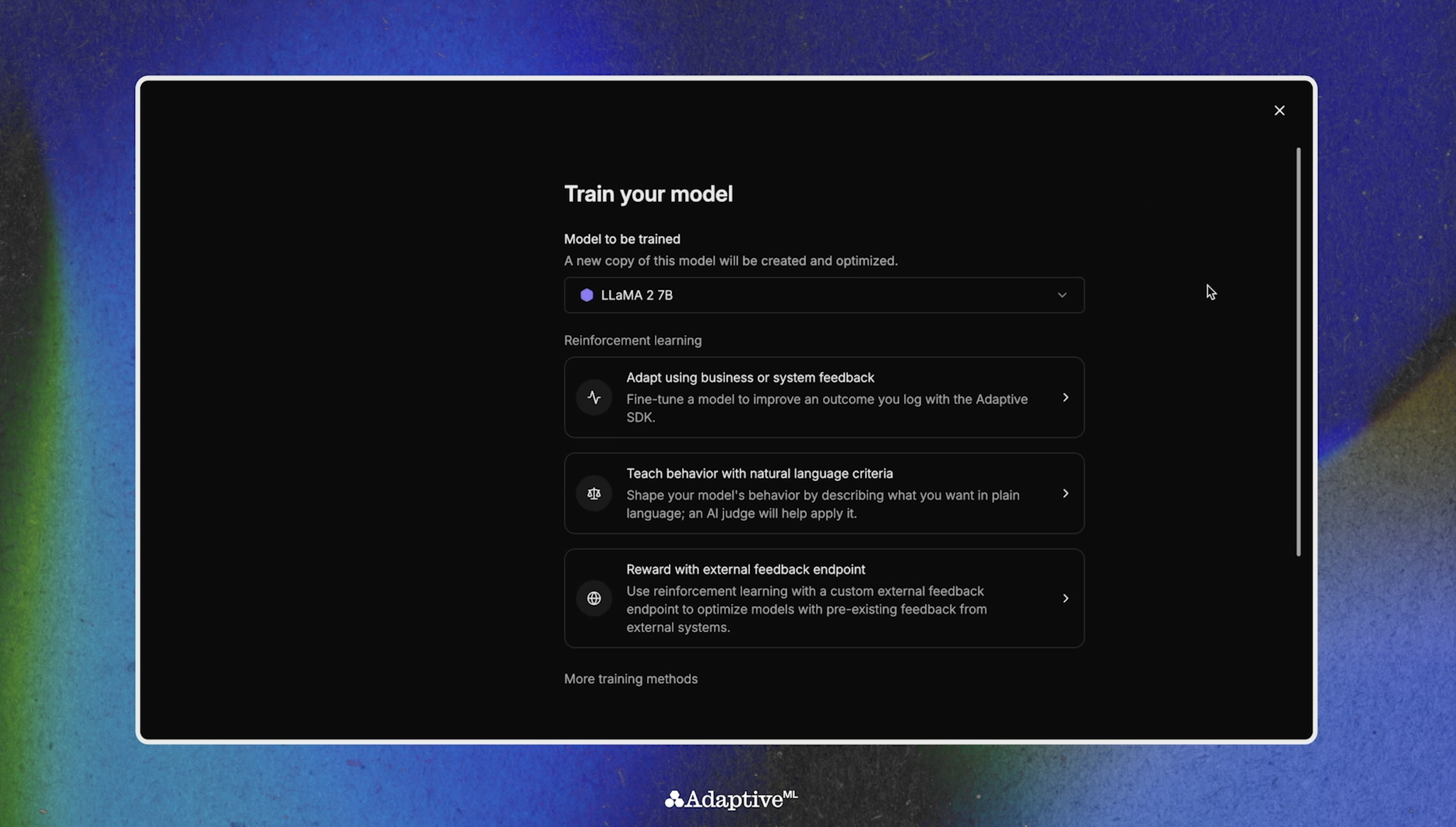

Adaptive Engine lets users choose the optimization algorithm for each RL training run (PPO, DPO, or GRPO) and even adjust individual hyperparameters.

For simplicity, Adaptive Engine will recommend default settings based on the task, model, and feedback method.

But what is the difference between these optimization algorithms, and when should each be used?


GRPO (Group Relative Policy Optimization) is an optimization algorithm that uses group-based comparisons to improve learning efficiency. Rather than evaluating each sampled trajectory in isolation, GRPO scores it relative to a group of related trajectories, typically several responses sampled for the same prompt.

For each prompt, the algorithm samples a group of outputs from the current policy and uses each output's reward relative to the rest of the group, rather than a learned value function, to guide optimization. This approach helps reduce variance in policy gradient estimates by focusing on relative improvements rather than absolute reward values.
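The group-relative scoring described above can be sketched in a few lines. This is a minimal illustration of the commonly published formulation (subtract the group mean, divide by the group standard deviation), not a description of Adaptive Engine's internals; the function name, the `eps` term, and the sample rewards are assumptions made for the example.

```python
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-8):
    """Score each sampled response relative to its own group.

    `rewards` holds one scalar reward per response sampled for the same
    prompt. `eps` is an assumed small constant that avoids division by
    zero when every response in the group receives the same reward.
    """
    baseline = mean(rewards)   # the group mean acts as the baseline
    spread = pstdev(rewards)   # population standard deviation of the group
    return [(r - baseline) / (spread + eps) for r in rewards]

# Hypothetical rewards for four responses to one prompt.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)

# Rescaling every reward by a constant leaves the advantages
# essentially unchanged, because only relative standing matters.
scaled_advantages = group_relative_advantages([10.0 * r for r in rewards])
```

Responses that beat the group average receive a positive advantage and the rest a negative one, so the policy update depends on how a response ranks within its group, not on the absolute scale of the reward.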

This method has shown promise in multi-task learning scenarios and environments where reward scaling or normalization is challenging. By incorporating group dynamics into policy optimization, GRPO can achieve more stable convergence and better generalization compared to traditional individual-trajectory-based methods.

GRPO can be particularly effective in environments with sparse or noisy rewards, where direct policy gradients might be unstable. The group-based structure emphasizes consistent improvements across related experiences rather than being overly influenced by outlier trajectories. Additionally, GRPO is often valuable in multi-agent environments.

As its name suggests, DPO (Direct Preference Optimization) differs in that it optimizes the policy directly on preference data, without explicit value function estimation or an actor-critic architecture. Whereas PPO relies on a learned value function and GRPO on group-relative reward comparisons to guide policy updates, DPO works directly with policy parameters and pairwise preference signals.

The algorithm uses preference-based learning to update the policy: preference labels from human or AI feedback take the place of an explicitly trained reward model.

DPO compares pairs of outputs, one preferred and one rejected, and adjusts the policy parameters to raise the likelihood of the preferred output relative to the rejected one. This approach can be more stable and interpretable than value-based methods, because it avoids value function approximation errors.

DPO excels with preference data. For example, consider training a chatbot; instead of designing a reward function for helpfulness, you can show the model pairs of responses alongside human or AI feedback. DPO directly optimizes the policy to match these preferences without needing a separate reward model.
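To make the pairwise update concrete, here is a minimal sketch of the per-pair DPO loss as it is commonly published: the negative log-sigmoid of a scaled difference in log-probability shifts between the trained policy and a frozen reference policy. The function name, the `beta` default, and the log-probability values are assumptions made for the example, not Adaptive Engine settings.

```python
import math

def dpo_pair_loss(policy_chosen_logp, policy_rejected_logp,
                  ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for a single (chosen, rejected) response pair.

    Each argument is the summed log-probability of a full response under
    either the policy being trained or the frozen reference policy.
    `beta` (an assumed default) controls how strongly the policy is
    pushed away from the reference.
    """
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_shift - rejected_shift)
    # -log(sigmoid(margin)) == softplus(-margin), in a numerically stable form.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))

# Hypothetical log-probabilities for one preference pair.
loss_at_reference = dpo_pair_loss(-12.0, -15.0, -12.0, -15.0)  # policy equals reference
loss_after_update = dpo_pair_loss(-10.0, -17.0, -12.0, -15.0)  # policy now favors the chosen response
```

The loss falls as the policy assigns relatively more probability to the preferred response than the reference does, which is how preference pairs alone can drive the update without a separate reward model.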