RL is a machine learning paradigm in which models optimize for certain behaviors through large-scale trial-and-error by interacting with an environment, and receiving feedback in the form of rewards.

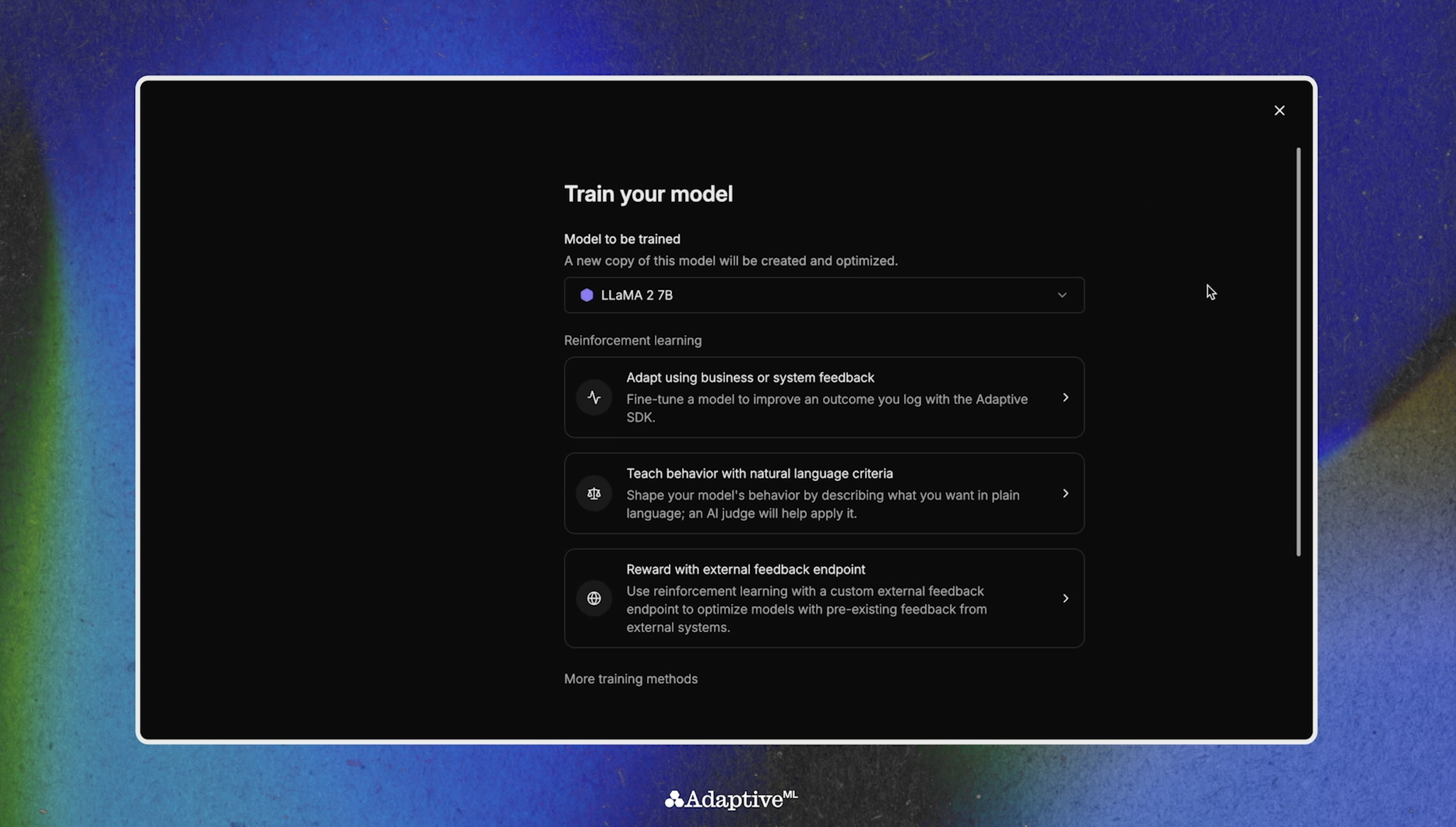

That reward function can take many forms: human, AI, or feedback endpoint. The source of feedback is the principle differentiation between RLHF, RLAIF, and RLVR.

Adaptive Engine enables users to utilize all of these methods in-concert at different parts of the tuning pipeline.

The first, and most obvious, candidate for a reward function is us: humans. In RLHF, the trainee model is provided with a set of prompts, and produces many responses. Human annotators read these responses and provide feedback, usually in the form of preference—i.e. response A is better than response B.

Armed with this preference data, RLHF is adept at aligning model output with the way humans want to receive information, in addition to the information they want to receive. RLHF is often useful for tuning LLM responses to be more helpful, polite, and on-topic.

It is also widely used to teach safety standards and content moderation; boundaries on sensitive topics, acceptable language, or societal issues are deeply nuanced and context-dependent. Human annotators can help capture the contours of this fraught landscape.

RLHF is also useful for incorporating subject matter expert (SME) feedback. Small nudges from experienced practitioners can make a big difference in user experience and model credibility.

The principal issue with RLHF is scalability. It’s both time- and labor-intensive for human annotators to provide feedback on hundreds or thousands of samples. To improve rapidly, models need faster, more efficient methods of reward assignment.

Instead of humans supplying the feedback to our trainee model, we can have another LLM assign rewards based on a given set of criteria. This removes the burden of manual reward assignment by automating it to an AI judge or reward model.

With RLAIF, the AI judge is provided with a set of guidelines or constitution for how the trainee model should behave. The AI judge then uses these guidelines as criteria to evaluate model completions, comparing many completions from the same model, or completions from different models to form an evaluation.

RLAIF massively accelerates post-training. The same judge can label new domains or refresh old ones without additional raters, making continual training easier. Additionally, a single judge eliminates inter-annotator disagreement and yields more repeatable, stable reward signals.

Caution should be exercised, however, as RLAIF can lead to ‘reward hacking,’ a phenomena where models learn to game the judge’s quirks rather than producing genuinely better answers. Additionally, there’s some risk with unchecked RLAIF that underlying biases within the AI judge can be magnified within the trainee model.

RLVR is a post-training strategy in which the reward signal comes from automatic, programmatic checks that can say with certainty whether the trajectory is correct or incorrect.

If the output passes the check, it gets a reward; if it fails, the reward is 0. Because the judgment is produced by deterministic code rather than a human or another model, the reward is said to be verifiable.

A few common examples of RLVR are mathematics, code generation, logical proofs, and schema correction. For all of these tasks, the model output can be checked against some dataset of correct results, and receive positive or negative signals through a feedback endpoint.

It may seem that RLVR can be used only to improve very specific functions (i.e. code generation), but research has shown that RLVR can provide the ‘aha’ moment of reasoning, teaching models to backtrack, self-test, and explore novel paths.